Kubernetes Docker For Mac Mount Filesystem


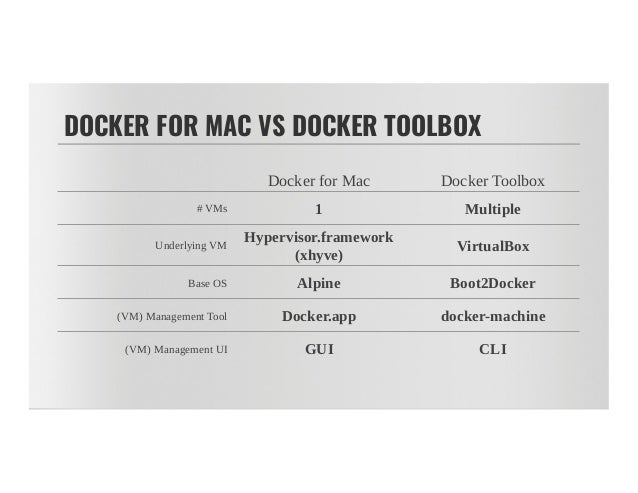

Docker is a full development platform for creating containerized apps, and Docker for Mac is the most efficient way to start and run Docker on your MacBook. It runs on a LinuxKit VM, NOT on VirtualBox or VMware Fusion. It embeds a hypervisor (based on xhyve), a Linux distribution that runs on LinuxKit, and filesystem and network sharing that is much more Mac-native. It is a native Mac application that you install in /Applications. At installation time, it creates symlinks in /usr/local/bin for docker, docker-compose and other commands, pointing to the binaries in the application bundle at /Applications/Docker.app/Contents/Resources/bin.

One of the most amazing features of Docker for Mac is that you simply drag and drop the application into /Applications, and the Docker CLI just works. The way its filesystem sharing maps macOS volumes seamlessly into Linux containers, remapping macOS UIDs into Linux, is one of its most anticipated features.

A Few Notable Features of Docker for Mac:

- Docker for Mac runs in a LinuxKit VM.

- Docker for Mac uses HyperKit instead of VirtualBox. HyperKit is a lightweight macOS virtualization solution built on top of Hypervisor.framework in macOS 10.10 Yosemite and higher.

- Docker for Mac does not use docker-machine to provision its VM. The Docker Engine API is exposed on a socket available to the Mac host at /var/run/docker.sock. This is the default location Docker and Docker Compose clients use to connect to the Docker daemon, so you can use the docker and docker-compose CLI commands on your Mac.

- When you install Docker for Mac, machines created with Docker Machine are not affected.

- There is no docker0 bridge on macOS. Because of the way networking is implemented in Docker for Mac, you cannot see a docker0 interface on the host. This interface is actually within the virtual machine.

- Docker for Mac now has multi-architecture support. It provides binfmt_misc multi-architecture support, so you can run containers for different Linux architectures, such as arm, mips, ppc64le, and even s390x.
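Because the Engine API is plain HTTP served on that Unix socket, you do not strictly need the docker CLI to reach it. Below is a minimal Python sketch using only the standard library. `/_ping` and `/version` are standard Engine API endpoints, but the helper names (`docker_get`, `parse_http_response`) are hypothetical, and the sketch skips things a real client handles, such as API versioning and keep-alive connections.

```python
import socket

DOCKER_SOCK = "/var/run/docker.sock"  # default Engine API socket on the Mac host


def parse_http_response(raw: bytes) -> tuple[int, bytes]:
    """Split a raw HTTP/1.x response into (status_code, body)."""
    head, _, body = raw.partition(b"\r\n\r\n")
    status_line = head.split(b"\r\n", 1)[0]  # e.g. b"HTTP/1.0 200 OK"
    status_code = int(status_line.split(b" ", 2)[1])
    return status_code, body


def docker_get(path: str, sock_path: str = DOCKER_SOCK) -> tuple[int, bytes]:
    """Send a plain GET request to the Docker Engine API over its Unix socket."""
    # HTTP/1.0 without keep-alive: the server closes the connection after replying,
    # so reading until EOF yields the complete (non-chunked) response.
    request = f"GET {path} HTTP/1.0\r\nHost: docker\r\n\r\n".encode("ascii")
    with socket.socket(socket.AF_UNIX, socket.SOCK_STREAM) as sock:
        sock.connect(sock_path)
        sock.sendall(request)
        chunks = []
        while True:
            chunk = sock.recv(4096)
            if not chunk:
                break
            chunks.append(chunk)
    return parse_http_response(b"".join(chunks))
```

On a Mac with Docker for Mac running, `docker_get("/_ping")` should come back as `(200, b"OK")`, and `docker_get("/version")` returns a JSON body describing the engine.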

In this blog post, I will take a deep dive into the Docker for Mac architecture and show how to access the service containers running on top of the LinuxKit VM.

At the base of the architecture is a hypervisor called HyperKit, which is derived from xhyve. The xhyve hypervisor is a port of bhyve to OS X. It is built on top of Hypervisor.framework in OS X 10.10 Yosemite and higher, runs entirely in userspace, and has no other dependencies. HyperKit is essentially a toolkit for embedding hypervisor capabilities in your application. It includes a complete hypervisor optimized for lightweight virtual machines and container deployment, and it is designed to be interfaced with higher-level components such as VPNKit and DataKit.

Sitting right next to HyperKit is the filesystem sharing solution, osxfs. osxfs is a new shared file system solution, exclusive to Docker for Mac, that provides a close-to-native user experience for bind mounting macOS file system trees into Docker containers. To this end, osxfs offers a number of unique capabilities, as well as some differences from a classical Linux file system. On macOS Sierra and lower, the default file system is HFS+; on macOS High Sierra, it is APFS. With the recent release, NFS volume sharing has been enabled for both Swarm and Kubernetes.

There is one more important component sitting next to HyperKit, aptly called VPNKit. VPNKit, a part of HyperKit, attempts to work nicely with VPN software by intercepting the VM traffic at the Ethernet level, parsing and understanding protocols like NTP, DNS, UDP and TCP, and doing the “right thing” with respect to the host’s VPN configuration. VPNKit operates by reconstructing Ethernet traffic from the VM and translating it into the relevant socket API calls on OS X. This allows the host application to generate traffic without requiring low-level Ethernet bridging support.
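To make “reconstructing Ethernet traffic and translating it into socket calls” a little more concrete, here is a toy Python model. It is not VPNKit’s code (VPNKit is written in OCaml and handles far more, including TCP state, DNS and ICMP); the function name and the shape of the returned dictionary are hypothetical. It decodes an Ethernet II frame carrying IPv4, and for UDP or TCP describes the host socket operation a proxy could issue on the VM’s behalf.

```python
import ipaddress
import struct

ETHERTYPE_IPV4 = 0x0800
PROTO_TCP, PROTO_UDP = 6, 17


def frame_to_socket_call(frame: bytes) -> dict:
    """Decode an Ethernet II / IPv4 frame from the VM and describe the host
    socket operation a VPNKit-style proxy could perform for it (toy model)."""
    _dst_mac, _src_mac, ethertype = struct.unpack("!6s6sH", frame[:14])
    if ethertype != ETHERTYPE_IPV4:
        return {"action": "drop", "reason": f"ethertype 0x{ethertype:04x}"}

    ip = frame[14:]
    ihl = (ip[0] & 0x0F) * 4  # IPv4 header length in bytes
    proto = ip[9]
    src_ip = str(ipaddress.IPv4Address(ip[12:16]))
    dst_ip = str(ipaddress.IPv4Address(ip[16:20]))
    l4 = ip[ihl:]  # transport-layer segment

    if proto == PROTO_UDP:
        src_port, dst_port, length, _checksum = struct.unpack("!HHHH", l4[:8])
        # A host-side proxy would open a SOCK_DGRAM socket and sendto() the payload.
        return {"action": "sendto", "dest": (dst_ip, dst_port),
                "payload": l4[8:length], "reply_to": (src_ip, src_port)}
    if proto == PROTO_TCP:
        src_port, dst_port = struct.unpack("!HH", l4[:4])
        # Simplification: a real proxy tracks TCP state and only connect()s on a SYN.
        return {"action": "connect", "dest": (dst_ip, dst_port),
                "reply_to": (src_ip, src_port)}
    return {"action": "drop", "reason": f"ip protocol {proto}"}
```

The key point the sketch illustrates is that the host never bridges raw frames: it only ever makes ordinary socket calls, which is why the traffic follows the host’s VPN routing.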

On top of these open source components sits the LinuxKit VM, which runs containerd and a set of service containers, including the Docker Engine. The LinuxKit VM is built from a YAML file. The docker-for-mac.yml file contains an example use of the open source components of Docker for Mac. The example supports controlling dockerd from the host via vsudd, and port forwarding with VPNKit. It requires HyperKit, VPNKit and a Docker client on the host to run.

https://gist.github.com/ajeetraina/ac983ea54d407ab82ba1f4d542d9c1b2

Sitting next to the Docker CE service containers, the kubelet binaries run inside the LinuxKit VM. If you are new to Kubernetes, the kubelet is an agent that runs on each node in the cluster and makes sure that containers are running in a pod. It takes a set of PodSpecs, provided through various mechanisms, and ensures that the containers described in those PodSpecs are running and healthy. The kubelet doesn’t manage containers that were not created by Kubernetes. On top of the kubelet, the Kubernetes services run. You can run either a Swarm cluster or a Kubernetes cluster, and you can use the same Compose YAML file to bring up both clusters side by side.
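The “take PodSpecs, make reality match” behaviour is a control loop. The following Python sketch models a single reconciliation pass under heavy simplifying assumptions: containers are identified only by name, and the `Container` type and `reconcile` function are hypothetical. A real kubelet works through the container runtime, tracks pods rather than bare names, runs health probes and applies restart policies.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Container:
    name: str
    created_by_kubernetes: bool


def reconcile(desired: set[str], running: list[Container]) -> dict[str, list[str]]:
    """One pass of a simplified kubelet-style control loop.

    desired: container names derived from the PodSpecs assigned to this node
    running: Container records currently present on the node
    """
    # Containers not created by Kubernetes are invisible to the loop.
    managed = {c.name for c in running if c.created_by_kubernetes}
    return {
        "start": sorted(desired - managed),  # described in a PodSpec but not running
        "stop": sorted(managed - desired),   # running but no longer in any PodSpec
    }
```

For example, with `web` and `db` desired and `web`, `old-job` (Kubernetes-managed) plus a hand-started `manual-debug` container running, the pass starts `db`, stops `old-job`, and leaves `manual-debug` alone.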

Peeping into LinuxKit VM

Curious about the VM and what Docker for Mac CE actually looks like inside?

Below are the commands you can use to get into the LinuxKit VM and see the Kubernetes services up and running. Here you go.

How to enter the LinuxKit VM?

Open the macOS Terminal and run the command below to enter the LinuxKit VM:

$ screen ~/Library/Containers/com.docker.docker/Data/com.docker.driver.amd64-linux/tty

Listing out the service containers:

Earlier, ctr tasks ls listed the service containers running inside the LinuxKit VM, but recent releases introduced containerd namespaces, so you may need to pass the namespace explicitly (with ctr’s -n flag) to list the service containers.

How to display the containerd version?

Under Docker for Mac 18.05 RC1, containerd version 1.0.1 is available.

How do I enter the docker-ce service container using containerd?

How to verify the single-node Kubernetes cluster?

Interested in reading further? Check out my curated list of blog posts:

Docker for Mac is built with LinuxKit. How to access the LinuxKit VM

Top 5 Exclusive Features of Docker for Mac That you can’t afford to ignore

5 Minutes to Bootstrap Kubernetes Cluster on GKE using Docker for Mac 18.03.0

Context Switching Made Easy under Kubernetes powered Docker for Mac 18.02.0

2-minutes to Kubernetes Cluster on Docker for Mac 18.01 using Swarm CLI

Docker For Mac 1.13.0 brings support for macOS Sierra, now runs ARM & AARCH64 based Docker containers

Docker for Mac 18.03.0 now comes with NFS Volume Sharing Support for Kubernetes

Did you find this blog helpful? Feel free to share your experience. Get in touch with me on Twitter at @ajeetsraina.


If you are looking for contribution opportunities or discussion, join me on the Docker Community Slack channel.


Kubernetes 1.14: Local Persistent Volumes GA

Authors: Michelle Au (Google), Matt Schallert (Uber), Celina Ward (Uber)

The Local Persistent Volumes feature has been promoted to GA in Kubernetes 1.14. It was first introduced as alpha in Kubernetes 1.7, and then beta in Kubernetes 1.10. The GA milestone indicates that Kubernetes users may depend on the feature and its API for production use. GA features are protected by the Kubernetes deprecation policy.

What is a Local Persistent Volume?

A local persistent volume represents a local disk directly attached to a single Kubernetes Node.

Kubernetes provides a powerful volume plugin system that enables Kubernetes workloads to use a wide variety of block and file storage to persist data. Most of these plugins enable remote storage – these remote storage systems persist data independent of the Kubernetes node where the data originated. Remote storage usually cannot offer the consistent high performance guarantees of local directly-attached storage. With the Local Persistent Volume plugin, Kubernetes workloads can now consume high performance local storage using the same volume APIs that app developers have become accustomed to.

How is it different from a HostPath Volume?

To better understand the benefits of a Local Persistent Volume, it is useful to compare it to a HostPath volume. HostPath volumes mount a file or directory from the host node’s filesystem into a Pod. Similarly, a Local Persistent Volume mounts a local disk or partition into a Pod.

The biggest difference is that the Kubernetes scheduler understands which node a Local Persistent Volume belongs to. With HostPath volumes, a pod referencing a HostPath volume may be moved by the scheduler to a different node, resulting in data loss. But with Local Persistent Volumes, the Kubernetes scheduler ensures that a pod using a Local Persistent Volume is always scheduled to the same node.
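What lets the scheduler “understand which node a volume belongs to” is the PersistentVolume’s `nodeAffinity`, which local PVs must set. The Python sketch below builds such a manifest as a plain dictionary. The helper name, the default StorageClass name `local-storage`, and the example paths and node names are hypothetical; the field names follow the Kubernetes v1 PersistentVolume API. The optional `local.fsType` field is, as we understand it, what drives the raw-block-device formatting described under “What’s New With GA?”.

```python
from typing import Optional


def local_pv(name: str, node: str, path: str, capacity: str,
             storage_class: str = "local-storage",
             fs_type: Optional[str] = None) -> dict:
    """Build a PersistentVolume manifest for a disk attached to a single node."""
    local = {"path": path}
    if fs_type is not None:
        # Only meaningful when `path` is a raw block device that should be formatted.
        local["fsType"] = fs_type
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolume",
        "metadata": {"name": name},
        "spec": {
            "capacity": {"storage": capacity},
            "volumeMode": "Filesystem",
            "accessModes": ["ReadWriteOnce"],
            "persistentVolumeReclaimPolicy": "Retain",
            "storageClassName": storage_class,
            "local": local,
            # nodeAffinity tells the scheduler which node owns this disk,
            # so Pods that claim the PV are always placed on that node.
            "nodeAffinity": {"required": {"nodeSelectorTerms": [{
                "matchExpressions": [{
                    "key": "kubernetes.io/hostname",
                    "operator": "In",
                    "values": [node],
                }],
            }]}},
        },
    }
```

Serialising the result with `json.dumps(..., indent=2)` gives a manifest that `kubectl apply -f` accepts, since JSON is valid YAML. In practice the external static provisioner creates these objects for you; the sketch only shows what it produces.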

While HostPath volumes may be referenced via a Persistent Volume Claim (PVC) or directly inline in a pod definition, Local Persistent Volumes can only be referenced via a PVC. This provides additional security benefits since Persistent Volume objects are managed by the administrator, preventing Pods from being able to access any path on the host.

Additional benefits include support for formatting of block devices during mount, and volume ownership using fsGroup.

What’s New With GA?

Since 1.10, we have mainly focused on improving stability and scalability of the feature so that it is production ready.

The only major feature addition is the ability to specify a raw block device and have Kubernetes automatically format and mount the filesystem. This reduces the previous burden of having to format and mount devices before giving them to Kubernetes.

Limitations of GA

At GA, Local Persistent Volumes do not support dynamic volume provisioning. However, there is an external controller available to help manage the local PersistentVolume lifecycle for individual disks on your nodes. This includes creating the PersistentVolume objects, and cleaning up and reusing disks once they have been released by the application.

How to Use a Local Persistent Volume?

Workloads can request a local persistent volume using the same PersistentVolumeClaim interface as remote storage backends. This makes it easy to swap out the storage backend across clusters, clouds, and on-prem environments.

First, a StorageClass should be created that sets volumeBindingMode: WaitForFirstConsumer to enable volume topology-aware scheduling. This mode instructs Kubernetes to wait to bind a PVC until a Pod using it is scheduled.
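A StorageClass of that kind might look like the following, built here as a Python dictionary and printed as JSON (which `kubectl apply -f` accepts). The class name `local-storage` is an assumption; `kubernetes.io/no-provisioner` is the provisioner value used for statically created local PVs, since at GA they have no dynamic provisioning.

```python
import json


def local_storage_class(name: str = "local-storage") -> dict:
    """StorageClass for statically created local PVs with delayed binding."""
    return {
        "apiVersion": "storage.k8s.io/v1",
        "kind": "StorageClass",
        "metadata": {"name": name},
        # Local PVs are created up front, so no dynamic provisioner is involved.
        "provisioner": "kubernetes.io/no-provisioner",
        # Delay PVC binding until a Pod using it is scheduled (topology-aware scheduling).
        "volumeBindingMode": "WaitForFirstConsumer",
    }


print(json.dumps(local_storage_class(), indent=2))
```

Without `WaitForFirstConsumer`, a PVC could bind immediately to a PV on a node where the Pod cannot otherwise run, which is exactly the conflict delayed binding avoids.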

Then, the external static provisioner can be configured and run to create PVs for all the local disks on your nodes.

Afterwards, workloads can start using the PVs by creating a PVC and Pod or a StatefulSet with volumeClaimTemplates.
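As a sketch of the StatefulSet route, the Python helper below builds a StatefulSet whose `volumeClaimTemplates` request storage from a local StorageClass, plus a helper that predicts the PVC names the StatefulSet controller generates (`<template>-<statefulset>-<ordinal>`). The function names, the `local-storage` class name, the `/data` mount path and the image you pass in are all placeholders; a real workload needs an image and command that actually keep running.

```python
def statefulset_with_local_pvcs(name: str, replicas: int, image: str, storage: str,
                                storage_class: str = "local-storage") -> dict:
    """StatefulSet whose replicas each get their own PVC from a local StorageClass."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "StatefulSet",
        "metadata": {"name": name},
        "spec": {
            "serviceName": name,
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [{
                    "name": name,
                    "image": image,
                    "volumeMounts": [{"name": "data", "mountPath": "/data"}],
                }]},
            },
            # One PVC per replica is created from this template.
            "volumeClaimTemplates": [{
                "metadata": {"name": "data"},
                "spec": {
                    "accessModes": ["ReadWriteOnce"],
                    "storageClassName": storage_class,
                    "resources": {"requests": {"storage": storage}},
                },
            }],
        },
    }


def expected_pvc_names(sts: dict) -> list[str]:
    """PVC names generated by the StatefulSet controller: <template>-<sts>-<ordinal>."""
    name = sts["metadata"]["name"]
    return [f"{t['metadata']['name']}-{name}-{i}"
            for t in sts["spec"]["volumeClaimTemplates"]
            for i in range(sts["spec"]["replicas"])]
```

Because each replica keeps its own PVC (and therefore its own local PV) across restarts, a rescheduled replica lands back on the node that holds its data.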

Once the StatefulSet is up and running, the PVCs are all bound.
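One way to check this from a script is to read the JSON that `kubectl get pvc -o json` prints (a list object with an `items` array) and look at each claim’s `status.phase`. The Python helper below is a hypothetical sketch. Keep in mind that with `WaitForFirstConsumer`, claims legitimately stay `Pending` until a Pod using them is scheduled.

```python
import json


def unbound_pvcs(pvc_list_json: str, prefix: str = "") -> list[str]:
    """Names of PVCs (optionally filtered by name prefix) whose phase is not Bound.

    pvc_list_json: output of `kubectl get pvc -o json`
    """
    items = json.loads(pvc_list_json)["items"]
    return [p["metadata"]["name"] for p in items
            if p["metadata"]["name"].startswith(prefix)
            and p.get("status", {}).get("phase") != "Bound"]
```

An empty result for the StatefulSet’s PVC prefix means every claim found a local PV.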

When the disk is no longer needed, the PVC can be deleted. The external static provisioner will clean up the disk and make the PV available for use again.

You can find full documentation for the feature on the Kubernetes website.

What Are Suitable Use Cases?

The primary benefit of Local Persistent Volumes over remote persistent storage is performance: local disks usually offer higher IOPS and throughput and lower latency compared to remote storage systems.

However, there are important limitations and caveats to consider when using Local Persistent Volumes:

- Using local storage ties your application to a specific node, making your application harder to schedule. Applications which use local storage should specify a high priority so that lower-priority pods that don’t require local storage can be preempted if necessary.

- If that node or local volume encounters a failure and becomes inaccessible, then that pod also becomes inaccessible. Manual intervention, external controllers, or operators may be needed to recover from these situations.

- While most remote storage systems implement synchronous replication, most local disk offerings do not provide data durability guarantees, meaning that loss of the disk or node may result in loss of all the data on that disk.
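The “specify a high priority” advice maps onto a PriorityClass that the local-storage Pods reference through `priorityClassName`. The Python sketch below builds one; the class name, value and helper names are hypothetical. The `scheduling.k8s.io/v1` API is the GA version in Kubernetes 1.14, and user-defined classes are limited to values of at most one billion (larger values are reserved for system classes), which the sketch checks.

```python
MAX_USER_PRIORITY = 1_000_000_000  # higher values are reserved for system priority classes


def priority_class(name: str, value: int, description: str) -> dict:
    """PriorityClass manifest for Pods that depend on local storage."""
    if value > MAX_USER_PRIORITY:
        raise ValueError(f"user-defined priority must be <= {MAX_USER_PRIORITY}")
    return {
        "apiVersion": "scheduling.k8s.io/v1",
        "kind": "PriorityClass",
        "metadata": {"name": name},
        "value": value,
        "globalDefault": False,  # only Pods that opt in get this priority
        "description": description,
    }


def with_priority(pod_spec: dict, class_name: str) -> dict:
    """Return a copy of a Pod spec that references the PriorityClass by name."""
    return {**pod_spec, "priorityClassName": class_name}
```

With this in place, the scheduler may preempt lower-priority Pods on the node that owns the local PV instead of leaving the local-storage Pod unschedulable.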

For these reasons, local persistent storage should only be considered for workloads that handle data replication and backup at the application layer, thus making the applications resilient to node or data failures and unavailability despite the lack of such guarantees at the individual disk level.

Examples of good workloads include software defined storage systems and replicated databases. Other types of applications should continue to use highly available, remotely accessible, durable storage.

How Uber Uses Local Storage

M3, Uber’s in-house metrics platform, piloted Local Persistent Volumes at scale in an effort to evaluate M3DB, an open-source, distributed timeseries database created by Uber. One of M3DB’s notable features is its ability to shard its metrics into partitions, replicate them by a factor of three, and then evenly disperse the replicas across separate failure domains.
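The “replicate by three, spread across failure domains” idea can be illustrated with a small placement function. This is not M3DB’s placement algorithm; it is a hypothetical Python sketch that gives each shard `rf` replicas in `rf` distinct zones, rotates the starting zone per shard, and round-robins across the nodes inside each zone so load stays balanced.

```python
from itertools import cycle


def place_replicas(num_shards: int, zones: dict[str, list[str]],
                   rf: int = 3) -> dict[int, list[str]]:
    """Map each shard to `rf` nodes, each in a different zone (failure domain).

    zones: zone name -> list of node names in that zone
    """
    if rf > len(zones):
        raise ValueError("need at least as many zones as replicas per shard")
    if any(not nodes for nodes in zones.values()):
        raise ValueError("every zone needs at least one node")
    zone_names = sorted(zones)
    # Round-robin over the nodes inside each zone to balance load within it.
    next_node = {z: cycle(sorted(zones[z])) for z in zone_names}
    placement = {}
    for shard in range(num_shards):
        # Rotate the starting zone so zones share replicas evenly when rf < len(zones).
        start = shard % len(zone_names)
        chosen = [zone_names[(start + k) % len(zone_names)] for k in range(rf)]
        placement[shard] = [next(next_node[z]) for z in chosen]
    return placement
```

With three zones of two nodes each and six shards, every shard gets one replica per zone and each node ends up holding three replicas, so losing any single node or zone still leaves two copies of every shard.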

Prior to the pilot with local persistent volumes, M3DB ran exclusively in Uber-managed environments. Over time, internal use cases arose that required the ability to run M3DB in environments with fewer dependencies. So the team began to explore options. As an open-source project, we wanted to provide the community with a way to run M3DB as easily as possible, with an open-source stack, while meeting M3DB’s requirements for high throughput, low-latency storage, and the ability to scale itself out.

The Kubernetes Local Persistent Volume interface, with its high-performance, low-latency guarantees, quickly emerged as the perfect abstraction to build on top of. With Local Persistent Volumes, individual M3DB instances can comfortably handle up to 600k writes per-second. This leaves plenty of headroom for spikes on clusters that typically process a few million metrics per-second.

Because M3DB also gracefully handles losing a single node or volume, the limited data durability guarantees of Local Persistent Volumes are not an issue. If a node fails, M3DB finds a suitable replacement and the new node begins streaming data from its two peers.

Thanks to the Kubernetes scheduler’s intelligent handling of volume topology, M3DB is able to programmatically and evenly disperse its replicas across multiple local persistent volumes in all available cloud zones, or, in the case of on-prem clusters, across all available server racks.

Uber’s Operational Experience

As mentioned above, while Local Persistent Volumes provide many benefits, they also require careful planning and careful consideration of constraints before committing to them in production. When thinking about our local volume strategy for M3DB, there were a few things Uber had to consider.

For one, we had to take into account the hardware profiles of the nodes in our Kubernetes cluster. For example, how many local disks would each node cluster have? How would they be partitioned?

The local static provisioner provides guidance to help answer these questions. It’s best to be able to dedicate a full disk to each local volume (for IO isolation) and a full partition per-volume (for capacity isolation). This was easier in our cloud environments where we could mix and match local disks. However, if using local volumes on-prem, hardware constraints may be a limiting factor depending on the number of disks available and their characteristics.

When first testing local volumes, we wanted to have a thorough understanding of the effect disruptions (voluntary and involuntary) would have on pods using local storage, and so we began testing some failure scenarios. We found that when a local volume becomes unavailable while the node remains available (such as when performing maintenance on the disk), a pod using the local volume will be stuck in a ContainerCreating state until it can mount the volume. If a node becomes unavailable, for example if it is removed from the cluster or is drained, then pods using local volumes on that node are stuck in an Unknown or Pending state depending on whether or not the node was removed gracefully.

Recovering pods from these interim states means having to delete the PVC binding the pod to its local volume and then delete the pod in order for it to be rescheduled (or wait until the node and disk are available again). We took this into account when building our operator for M3DB, which makes changes to the cluster topology when a pod is rescheduled such that the new one gracefully streams data from the remaining two peers. Eventually we plan to automate the deletion and rescheduling process entirely.
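The manual recovery described above is two kubectl calls in a specific order. The Python sketch below (helper names and example object names are hypothetical, not Uber’s operator code) builds them and prints them in dry-run mode. The PVC deletion uses `--wait=false` because the `kubernetes.io/pvc-protection` finalizer keeps the claim alive while a pod still uses it; deleting the pod afterwards releases it. If the node is unreachable, the pod deletion itself may not complete until the node is removed from the cluster, which this sketch does not handle.

```python
import shlex
import subprocess


def recovery_commands(namespace: str, pod: str, pvc: str) -> list[list[str]]:
    """kubectl steps to unbind a pod from a lost local volume and reschedule it."""
    return [
        # The pvc-protection finalizer holds the PVC while the pod exists,
        # so request deletion without blocking on it.
        ["kubectl", "-n", namespace, "delete", "pvc", pvc, "--wait=false"],
        # Deleting the pod lets the PVC go away; a StatefulSet controller then
        # recreates the pod with a fresh PVC from its volumeClaimTemplates.
        ["kubectl", "-n", namespace, "delete", "pod", pod],
    ]


def run(commands: list[list[str]], dry_run: bool = True) -> None:
    for cmd in commands:
        if dry_run:
            print(shlex.join(cmd))  # show what would be executed
        else:
            subprocess.run(cmd, check=True)
```

Keeping `dry_run=True` as the default makes it harder to delete claims by accident, which matters when a PVC is the only link to a replica’s data.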

Alerts on pod states can help call attention to stuck local volumes, and workload-specific controllers or operators can remediate them automatically. Because of these constraints, it’s best to exclude nodes with local volumes from automatic upgrades or repairs, and in fact some cloud providers explicitly mention this as a best practice.
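A simple alert condition can be derived from `kubectl get pods -o json`: flag pods that mount a PVC and have sat in the `Pending` or `Unknown` phase for longer than a threshold. The Python sketch below is hypothetical. It uses `metadata.creationTimestamp` as a rough proxy for how long a pod has been stuck, and a pod shown as ContainerCreating is in the `Pending` phase, so it is covered by the same check.

```python
import json
from datetime import datetime, timedelta, timezone


def stuck_pods(pods_json: str, now: datetime, threshold: timedelta) -> list[str]:
    """Names of PVC-using pods still Pending/Unknown after `threshold`.

    pods_json: output of `kubectl get pods -o json`
    """
    stuck = []
    for pod in json.loads(pods_json)["items"]:
        volumes = pod.get("spec", {}).get("volumes", [])
        if not any("persistentVolumeClaim" in v for v in volumes):
            continue  # only pods that depend on a claim are of interest here
        if pod.get("status", {}).get("phase") not in ("Pending", "Unknown"):
            continue
        created = datetime.strptime(pod["metadata"]["creationTimestamp"],
                                    "%Y-%m-%dT%H:%M:%SZ").replace(tzinfo=timezone.utc)
        if now - created > threshold:
            stuck.append(pod["metadata"]["name"])
    return stuck
```

The returned names are natural inputs for a page to an operator or for an automated remediation step.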

Portability Between On-Prem and Cloud

Local Volumes played a big role in Uber’s decision to build orchestration for M3DB using Kubernetes, in part because it is a storage abstraction that works the same across on-prem and cloud environments. Remote storage solutions have different characteristics across cloud providers, and some users may prefer not to use networked storage at all in their own data centers. On the other hand, local disks are relatively ubiquitous and provide more predictable performance characteristics.

By orchestrating M3DB using local disks in the cloud, where it was easier to get up and running with Kubernetes, we gained confidence that we could still use our operator to run M3DB in our on-prem environment without any modifications. As we continue to work on how we’d run Kubernetes on-prem, having solved such an important pending question is a big relief.

What’s Next for Local Persistent Volumes?

As we’ve seen with Uber’s M3DB, local persistent volumes have successfully been used in production environments. As adoption of local persistent volumes continues to increase, SIG Storage continues to seek feedback for ways to improve the feature.

One of the most frequent asks has been for a controller that can help with recovery from failed nodes or disks, which is currently a manual process (or something that has to be built into an operator). SIG Storage is investigating creating a common controller that can be used by workloads with simple and similar recovery processes.

Another popular ask has been to support dynamic provisioning using LVM. This can simplify disk management, and improve disk utilization. SIG Storage is evaluating the performance tradeoffs for the viability of this feature.

Getting Involved

If you have feedback for this feature or are interested in getting involved with the design and development, join the Kubernetes Storage Special-Interest-Group (SIG). We’re rapidly growing and always welcome new contributors.

Special thanks to all the contributors that helped bring this feature to GA, including Chuqiang Li (lichuqiang), Dhiraj Hedge (dhirajh), Ian Chakeres (ianchakeres), Jan Šafránek (jsafrane), Michelle Au (msau42), Saad Ali (saad-ali), Yecheng Fu (cofyc) and Yuquan Ren (nickrenren).